<Gated Feedback Recurrent Neural Networks>

1. This paper introduces gated-feedback RNN(GF-RNN), which is inspired by clockwork RNN(CW-RNN). The CW-RNN implemented this by allowing the i-th module to operate at the rate of  , where i is a positive integer, meaning the module is updated only when t mode

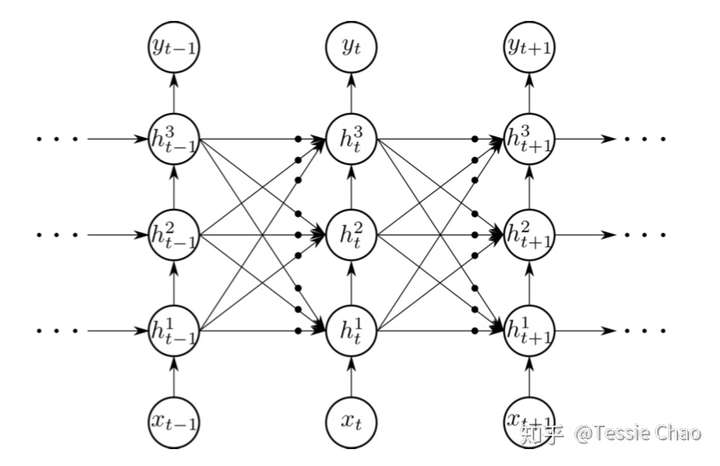

, where i is a positive integer, meaning the module is updated only when t mode  = 0. This makes each module to operate at different rates. In addition, they precisely defined the connectivity pattern between modules by allowing the i-th module to be affected by j-th module when j>i. (I think it is because when j-module is updated, we can be sure that i-module is updated.) Similar to the CW-RNN, the author partitions the hidden units into multiple modules in which each module corresponds to a different layer in a stack of recurrent layers. the author let each module operate at different timescales by hierarchically stacking them. Each module is fully connected to all the other modules across the stack and itself. The recurrent connection between two module, instead, is gated by a logistic unit which is computed based on the current input and the previous states of the hidden layers. It is called a global reset gate.

= 0. This makes each module to operate at different rates. In addition, they precisely defined the connectivity pattern between modules by allowing the i-th module to be affected by j-th module when j>i. (I think it is because when j-module is updated, we can be sure that i-module is updated.) Similar to the CW-RNN, the author partitions the hidden units into multiple modules in which each module corresponds to a different layer in a stack of recurrent layers. the author let each module operate at different timescales by hierarchically stacking them. Each module is fully connected to all the other modules across the stack and itself. The recurrent connection between two module, instead, is gated by a logistic unit which is computed based on the current input and the previous states of the hidden layers. It is called a global reset gate.
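The CW-RNN clock schedule is easy to check in code. The following is a minimal Python sketch, assuming module i has period $2^{i-1}$. The function names are hypothetical and do not come from the CW-RNN or GF-RNN papers. It lists which modules are updated at each time step. It also checks the nesting property from the parenthetical note: whenever a slower module j fires, every faster module i < j fires as well.

```python
# Minimal sketch of the CW-RNN clock schedule (hypothetical helper names).
# Assumption: module i (1-indexed) has clock period 2**(i - 1).


def period(i):
    """Clock period of the i-th module."""
    return 2 ** (i - 1)


def active_modules(t, num_modules):
    """Modules (1-indexed) updated at time step t, i.e. those with t % period(i) == 0."""
    return [i for i in range(1, num_modules + 1) if t % period(i) == 0]


def slower_implies_faster(num_modules, horizon):
    """Check that if module j is updated at t, every module i < j is updated too.

    This holds because 2**(j - 1) is a multiple of 2**(i - 1) whenever j > i.
    """
    for t in range(horizon):
        active = set(active_modules(t, num_modules))
        for j in active:
            if any(i not in active for i in range(1, j)):
                return False
    return True


# With 4 modules, module 1 fires every step and module 4 only every 8th step.
for t in range(1, 9):
    print(t, active_modules(t, 4))
```

In the printed schedule for t = 1..8, module 4 fires only at t = 8. At every step, the set of active modules is a prefix 1..k.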

The network is as follows:

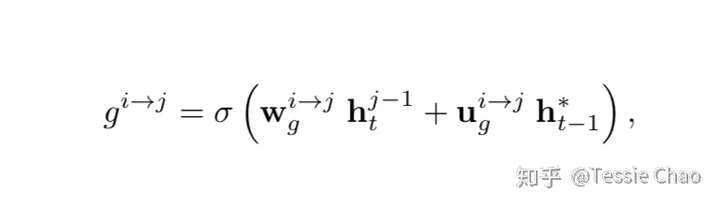

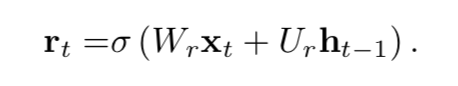

The bullets correspond to global reset gates, which are computed as:

$$g^{i\to j} = \sigma\left(w_g^{i\to j} h_t^{j-1} + u_g^{i\to j} h_{t-1}^{*}\right)$$

Here $h_t^{j-1}$ is the input to layer j at time t (with $h_t^0 = x_t$), and $h_{t-1}^{*}$ is the concatenation of all the hidden states from time step t-1. The superscript $i \to j$ denotes the transition from layer i to layer j, and $w_g^{i\to j}$ and $u_g^{i\to j}$ are weight vectors. In other words, the signal from $h_{t-1}^i$ to $h_t^j$ is controlled by a single scalar $g^{i\to j}$.
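To illustrate how the gates are computed, the following Python sketch computes the gates $g^{i\to j} = \sigma(w_g^{i\to j} h_t^{j-1} + u_g^{i\to j} h_{t-1}^{*})$ for one target layer j. It uses plain lists for vectors and omits biases. The function names and example weights are hypothetical placeholders, not values from the paper. The point is that each source layer i contributes a single scalar gate, computed from the layer input and the concatenation of all previous hidden states.

```python
# Minimal sketch of the global reset gates feeding one layer j of a GF-RNN.
# Assumptions: plain lists stand in for vectors, biases are omitted, and the
# example weight vectors are hypothetical placeholders for learned parameters.
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def global_reset_gate(w_g, u_g, h_below_t, h_star_prev):
    """Scalar gate g^{i->j} = sigmoid(w_g . h_t^{j-1} + u_g . h*_{t-1})."""
    return sigmoid(dot(w_g, h_below_t) + dot(u_g, h_star_prev))


def gates_into_layer(w_g_list, u_g_list, h_below_t, h_prev):
    """Return [g^{1->j}, ..., g^{L->j}] for one target layer j.

    h_below_t is h_t^{j-1} (x_t for the first layer); h_prev is the list
    [h_{t-1}^1, ..., h_{t-1}^L], concatenated here into h*_{t-1}.
    w_g_list[i] and u_g_list[i] are the weight vectors of the transition i -> j.
    """
    h_star_prev = [v for h in h_prev for v in h]
    return [global_reset_gate(w, u, h_below_t, h_star_prev)
            for w, u in zip(w_g_list, u_g_list)]


# Example: 2 layers with 2 units each and a 2-dimensional input.
x_t = [1.0, -1.0]
h_prev = [[0.5, 0.1], [0.2, -0.3]]
w_g_list = [[0.1, 0.2], [0.0, 0.3]]                      # one vector per source layer i
u_g_list = [[0.1, 0.0, 0.2, 0.1], [0.0, 0.0, 0.0, 0.0]]  # length = total hidden size
print(gates_into_layer(w_g_list, u_g_list, x_t, h_prev))
```

Each gate lies strictly between 0 and 1. A gate near 0 effectively cuts the recurrent path from layer i into layer j at that time step.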

2. The GF-RNN further allows information from the upper recurrent layers, which correspond to coarser timescales, to flow back into the lower recurrent layers, which correspond to finer timescales.

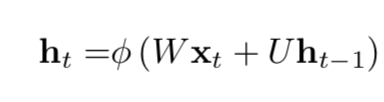

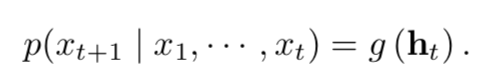
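The following small sketch makes this difference concrete by enumerating recurrent connections $h_{t-1}^i \to h_t^j$ in a conventional stacked RNN and in a GF-RNN. The helper names are hypothetical. Edges with i > j are the top-down paths from coarser to finer layers. Only the GF-RNN has them.

```python
# Hypothetical helpers contrasting recurrent connectivity (h_{t-1}^i -> h_t^j)
# in a conventional stacked RNN and in a GF-RNN. Layers are 1-indexed, with
# layer 1 at the bottom (finest timescale).


def recurrent_edges(num_layers, gated_feedback):
    """Return recurrent connections as (source layer i, target layer j) pairs."""
    layers = range(1, num_layers + 1)
    if not gated_feedback:
        # Conventional stack: each layer only feeds itself across time.
        return [(i, i) for i in layers]
    # GF-RNN: every layer feeds every layer (itself included), each edge gated by g^{i->j}.
    return [(i, j) for i in layers for j in layers]


def top_down_edges(edges):
    """Edges from an upper (coarser) layer back into a lower (finer) layer."""
    return [(i, j) for i, j in edges if i > j]


print(top_down_edges(recurrent_edges(3, gated_feedback=False)))  # []
print(top_down_edges(recurrent_edges(3, gated_feedback=True)))   # [(2, 1), (3, 1), (3, 2)]
```

With L layers, the GF-RNN has $L^2$ gated recurrent edges, compared with L in the conventional stack.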

3. Recurrent Neural Network. The formulas below show how the hidden state $h_t$ is computed from the current input $x_t$ and the previous hidden state $h_{t-1}$.

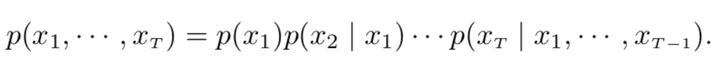

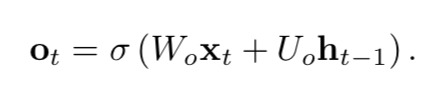
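As a rough illustration, here is a minimal Python sketch of a simple RNN. It assumes the common choice $h_t = \tanh(W x_t + U h_{t-1})$ with biases omitted. The images above may show the paper's exact form. The function names are hypothetical.

```python
# Minimal sketch of a tanh RNN, assuming h_t = tanh(W x_t + U h_{t-1})
# (biases omitted; plain lists stand in for vectors and matrices).
import math


def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def rnn_step(W, U, x_t, h_prev):
    """One step: combine the current input with the previous hidden state."""
    return [math.tanh(a + b) for a, b in zip(matvec(W, x_t), matvec(U, h_prev))]


def run_rnn(W, U, xs, h0):
    """Unroll rnn_step over the input sequence xs and return every hidden state."""
    states, h = [], h0
    for x_t in xs:
        h = rnn_step(W, U, x_t, h)
        states.append(h)
    return states


W = [[1.0, 0.0], [0.0, 1.0]]
U = [[0.5, 0.0], [0.0, 0.5]]
print(run_rnn(W, U, [[1.0, -1.0], [0.0, 0.0]], h0=[0.0, 0.0]))
```

The second state depends only on the first, through U, because the second input is zero. This shows how the recurrence carries information forward in time.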

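Language modeling: the probability of a sequence factorizes as

$$p(x_1, \ldots, x_T) = \prod_{t=1}^{T} p(x_t \mid x_1, \ldots, x_{t-1})$$

and we train an RNN to model this distribution by letting it predict $x_{t+1}$ given $x_1, \ldots, x_t$.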

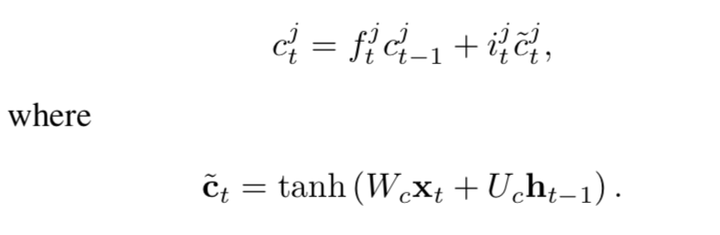

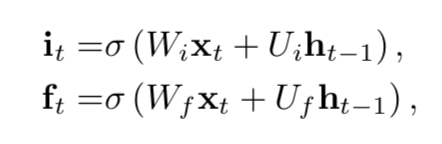

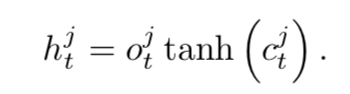
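The following minimal Python sketch shows how this factorization is used in practice. The log-probability of a sequence is the sum of the per-step log-probabilities of each next symbol. The function `next_symbol_probs(prefix)` is a hypothetical stand-in for an RNN that has read `prefix` and outputs a distribution over symbols. The uniform "model" only keeps the example self-contained. Bits per character is shown because it is a common way to report character-level language modeling results.

```python
# Minimal sketch: log p(x_1..x_T) = sum_t log p(x_t | x_1..x_{t-1}).
# Assumption: next_symbol_probs(prefix) is a hypothetical stand-in for an RNN
# that has read `prefix` and returns a dict symbol -> probability (e.g. a softmax).
import math


def sequence_log_prob(next_symbol_probs, seq):
    """Sum of the log-probabilities of each symbol given everything before it."""
    return sum(math.log(next_symbol_probs(seq[:t])[seq[t]]) for t in range(len(seq)))


def bits_per_symbol(next_symbol_probs, seq):
    """Average negative log2-likelihood per symbol (bits per character for text)."""
    return -sequence_log_prob(next_symbol_probs, seq) / (len(seq) * math.log(2))


def uniform_model(vocab):
    """A trivial 'model' that ignores the prefix and predicts uniformly."""
    return lambda prefix: {s: 1.0 / len(vocab) for s in vocab}


model = uniform_model("ab")
print(sequence_log_prob(model, "abba"))  # 4 * log(0.5), up to rounding
print(bits_per_symbol(model, "abba"))    # about 1 bit per character
```

A trained RNN would replace `uniform_model` with predictions conditioned on its hidden state. It should assign higher probability to the observed next symbols, which means fewer bits per character.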

4. Long Short-Term Memory

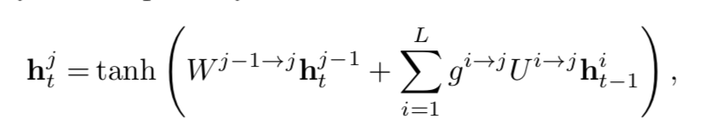

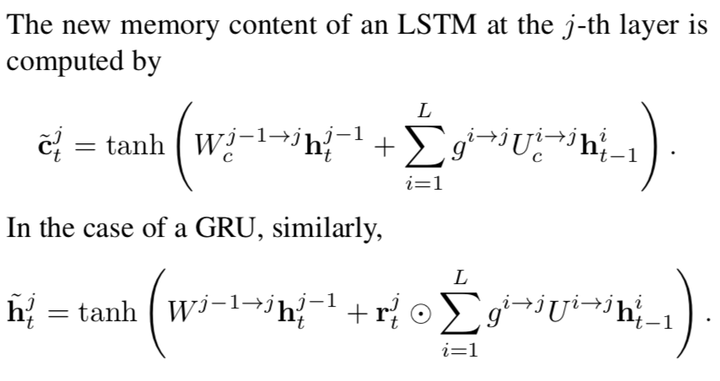

An LSTM unit consists of a memory cell $c_t^j$, an input gate $i_t^j$, a forget gate $f_t^j$, and an output gate $o_t^j$. Here $c_t^j$ denotes the content of the j-th LSTM unit at time step t, i.e., the j-th dimension of $c_t$. The formulas are as follows:

The input and forget gates control how much new content should be memorized and how much old content should be forgotten, respectively. The output gate controls the degree to which the memory content is exposed.

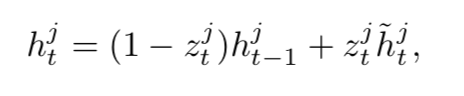

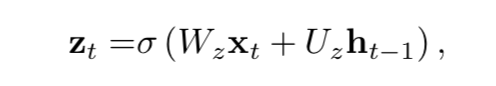

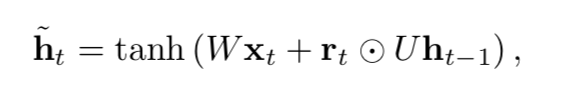
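The following minimal Python sketch shows how the three gates interact in one LSTM step. It assumes the standard form without peephole connections or biases. The images above may contain extra terms used in the paper. The parameter layout and names are hypothetical.

```python
# Minimal sketch of one LSTM step (no peepholes, no biases), assuming:
#   i, f, o = sigmoid(W x_t + U h_{t-1})   (separate W, U per gate)
#   c~_t = tanh(W_c x_t + U_c h_{t-1})
#   c_t = f * c_{t-1} + i * c~_t,   h_t = o * tanh(c_t)
# The params layout is hypothetical.
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def pre_activation(W, U, x_t, h_prev):
    """W x_t + U h_{t-1}."""
    return [a + b for a, b in zip(matvec(W, x_t), matvec(U, h_prev))]


def lstm_step(params, x_t, h_prev, c_prev):
    """params maps 'i', 'f', 'o', 'c' to (W, U) pairs; returns (h_t, c_t)."""
    i = [sigmoid(a) for a in pre_activation(*params["i"], x_t, h_prev)]   # input gate
    f = [sigmoid(a) for a in pre_activation(*params["f"], x_t, h_prev)]   # forget gate
    o = [sigmoid(a) for a in pre_activation(*params["o"], x_t, h_prev)]   # output gate
    c_new = [math.tanh(a) for a in pre_activation(*params["c"], x_t, h_prev)]  # new content
    # Forget part of the old memory and add part of the new content.
    c = [fj * cp + ij * cn for fj, cp, ij, cn in zip(f, c_prev, i, c_new)]
    # Expose the memory through the output gate.
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c


zeros = [[0.0, 0.0], [0.0, 0.0]]
params = {name: (zeros, zeros) for name in "ifoc"}
h, c = lstm_step(params, x_t=[1.0, 2.0], h_prev=[0.0, 0.0], c_prev=[1.0, -2.0])
print(h, c)  # with zero weights every gate is 0.5, so c = 0.5 * c_prev
```

With all weights set to zero, every gate equals 0.5 and the new content is tanh(0) = 0. The step therefore halves the previous memory, which is an easy sanity check on the wiring.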

5. Gated Recurrent Unit

$h_{t-1}^j$ and $\tilde{h}_t^j$ correspond to the previous memory content and the new candidate memory content, respectively. The update gate $z_t^j$ controls how much of the previous memory content is forgotten and how much of the new memory content is added.

The reset gate $r_t^j$ decides how much of the previous hidden state to ignore when computing the new candidate memory content.

The reset mechanism helps the GRU to use the model capacity efficiently by allowing it to reset whenever the detected feature is not necessary anymore.
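The following minimal Python sketch shows one GRU step without biases. It assumes the convention $h_t = (1 - z_t) h_{t-1} + z_t \tilde{h}_t$, where the update gate weights the new candidate. Some libraries swap the roles of $z_t$ and $1 - z_t$. Parameter names and layout are hypothetical.

```python
# Minimal sketch of one GRU step (no biases), assuming:
#   z = sigmoid(W_z x + U_z h_prev),  r = sigmoid(W_r x + U_r h_prev)
#   h~ = tanh(W x + U (r * h_prev)),   h = (1 - z) * h_prev + z * h~
# Some libraries swap z and 1 - z. The params layout is hypothetical.
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def gru_step(params, x_t, h_prev):
    """params maps 'z', 'r', 'h' to (W, U) pairs; returns h_t."""
    W_z, U_z = params["z"]
    W_r, U_r = params["r"]
    W_h, U_h = params["h"]
    z = [sigmoid(a) for a in add(matvec(W_z, x_t), matvec(U_z, h_prev))]  # update gate
    r = [sigmoid(a) for a in add(matvec(W_r, x_t), matvec(U_r, h_prev))]  # reset gate
    # The reset gate scales the previous state before it enters the candidate.
    reset_h = [rj * hj for rj, hj in zip(r, h_prev)]
    h_cand = [math.tanh(a) for a in add(matvec(W_h, x_t), matvec(U_h, reset_h))]
    # Interpolate between the previous content and the new candidate.
    return [(1.0 - zj) * hp + zj * hc for zj, hp, hc in zip(z, h_prev, h_cand)]


zeros = [[0.0, 0.0], [0.0, 0.0]]
params = {name: (zeros, zeros) for name in "zrh"}
print(gru_step(params, x_t=[1.0, 2.0], h_prev=[1.0, -2.0]))  # zero weights: 0.5 * h_prev
```

When the reset gate is near 0, the candidate ignores $h_{t-1}$ and is driven by the input alone. This is how the unit can "reset" once a detected feature is no longer needed.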

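6. In the GF-RNN, the global reset gates are used only when computing the new content. The unit-wise gates (the input, forget and output gates of the LSTM; the update and reset gates of the GRU) are computed as before. With L layers and $h_t^0 = x_t$, the update for tanh units becomes:

$$h_t^j = \tanh\left(W^{j-1\to j} h_t^{j-1} + \sum_{i=1}^{L} g^{i\to j} U^{i\to j} h_{t-1}^i\right)$$

For the LSTM, the new memory content becomes:

$$\tilde{c}_t^j = \tanh\left(W_c^{j-1\to j} h_t^{j-1} + \sum_{i=1}^{L} g^{i\to j} U_c^{i\to j} h_{t-1}^i\right)$$

For the GRU, the new candidate memory content becomes:

$$\tilde{h}_t^j = \tanh\left(W^{j-1\to j} h_t^{j-1} + r_t^j \odot \sum_{i=1}^{L} g^{i\to j} U^{i\to j} h_{t-1}^i\right)$$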
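The following minimal Python sketch shows one time step of a GF-RNN with tanh units. It assumes $g^{i\to j} = \sigma(w_g^{i\to j} h_t^{j-1} + u_g^{i\to j} h_{t-1}^{*})$ and $h_t^j = \tanh(W^{j-1\to j} h_t^{j-1} + \sum_i g^{i\to j} U^{i\to j} h_{t-1}^i)$ with $h_t^0 = x_t$, and it omits biases. The `params` layout and function names are hypothetical. Layers are computed bottom-up within the time step. Every layer also receives gated recurrent input from every layer at t-1, including the layers above it.

```python
# Minimal sketch of one GF-RNN time step with tanh units (biases omitted):
#   g^{i->j} = sigmoid(w_g^{i->j} . h_t^{j-1} + u_g^{i->j} . h*_{t-1})
#   h_t^j    = tanh(W^{j-1->j} h_t^{j-1} + sum_i g^{i->j} U^{i->j} h_{t-1}^i)
# with h_t^0 = x_t. Indices are 0-based in code; the params layout is hypothetical.
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def gf_rnn_step(params, x_t, h_prev):
    """h_prev = [h_{t-1}^1, ..., h_{t-1}^L]; returns [h_t^1, ..., h_t^L].

    params["W"][j]      : W^{j-1 -> j}  (bottom-up, same time step)
    params["U"][i][j]   : U^{i -> j}    (recurrent, from layer i at t-1)
    params["w_g"][i][j] : w_g^{i -> j}  (gate weights on h_t^{j-1})
    params["u_g"][i][j] : u_g^{i -> j}  (gate weights on h*_{t-1})
    """
    num_layers = len(h_prev)
    h_star = [v for h in h_prev for v in h]  # h*_{t-1}: all previous states concatenated
    below = x_t                              # h_t^0 = x_t
    h_new = []
    for j in range(num_layers):
        pre = matvec(params["W"][j], below)
        for i in range(num_layers):
            # Each recurrent path i -> j is scaled by its own scalar gate.
            g = sigmoid(dot(params["w_g"][i][j], below) + dot(params["u_g"][i][j], h_star))
            pre = [p + g * c for p, c in zip(pre, matvec(params["U"][i][j], h_prev[i]))]
        below = [math.tanh(p) for p in pre]
        h_new.append(below)
    return h_new


eye = [[1.0, 0.0], [0.0, 1.0]]
zero_mat = [[0.0, 0.0], [0.0, 0.0]]
zero_vec2, zero_vec4 = [0.0, 0.0], [0.0] * 4
params = {
    "W": [zero_mat, zero_mat],
    "U": [[eye, eye], [eye, eye]],
    "w_g": [[zero_vec2, zero_vec2], [zero_vec2, zero_vec2]],
    "u_g": [[zero_vec4, zero_vec4], [zero_vec4, zero_vec4]],
}
print(gf_rnn_step(params, x_t=[1.0, 1.0], h_prev=[[1.0, 0.0], [0.0, 2.0]]))
```

With zero gate weights, every gate is 0.5, so both layers compute $\tanh(0.5\,(h_{t-1}^1 + h_{t-1}^2))$. The bottom layer therefore receives the previous state of the layer above it. This is the top-down feedback path that a conventional stacked RNN lacks.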

7. There are two experiments, Language Modeling and Python Program Evaluation. See the paper for more details.